Term Paper Proposal

Due dates:

Draft (10 points): October 25, 2019, 11:59 PM

Final (15 points): November 1, 2019, 11:59 PM

1. Topics

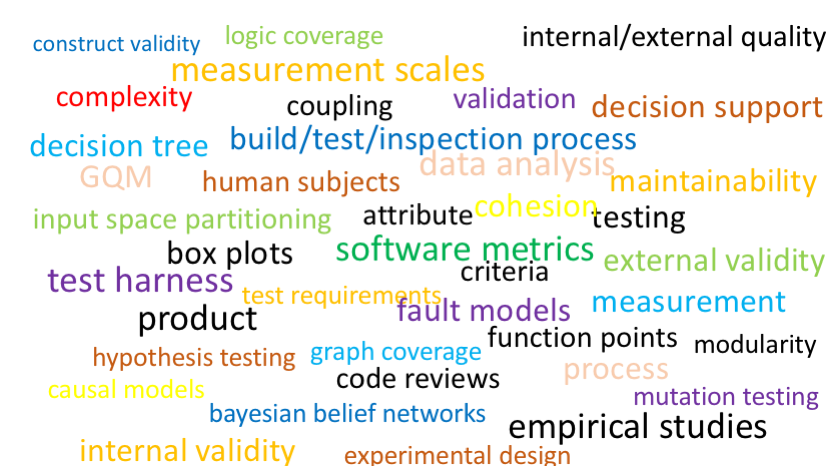

Before you write your draft proposal, discuss your topic area and plans with me. Feel free to stop by my office (on-campus students) or send me email (distance students). Topics include, but are not limited to, the following:

- Testing VR/AR applications

- Data quality testing

- Data warehouse testing

- Regression test selection

- Automatic program repair

- Fault localization

- Mutation testing

- Test input generation

- GUI testing

- Applying techniques you learned in class to an open source project

1.1. Testing VR/AR applications

Advances in virtual reality (VR) and augmented reality (AR) headsets, such as HTC Vive (VR headset), Microsoft HoloLens (AR headset), and Magic Leap One (AR headset), have paved the way for new uses in education, urban planning, and automotive assistance. VR and AR headsets will be a dominant paradigm in the future of interactive computing in most domains, and people will soon use them to improve their lives in many areas.

Problem: How do you perform effective and efficient software testing for VR/AR systems? We would like to evaluate to what extent existing GUI testing techniques can work for VR/AR systems. This will identify VR/AR testing challenges that existing GUI testing techniques can't handle.

To create a testbed, we recommend two options for the student(s): (A) use an existing VR Architecture education application running in Unreal Engine, or (B) create a new VR environment running in either Unity Game Engine or Unreal Engine (the problem itself may allow a different type of development environment).

References: The following references are a good starting point (but notice that there isn’t a lot about VR/AR software testing):

- Gerhard Reitmayr and Dieter Schmalstieg. 2001. An open software architecture for virtual reality interaction. In Proceedings of the ACM symposium on Virtual reality software and technology (VRST '01). ACM, New York, NY, USA, 47-54. DOI=http://dx.doi.org/10.1145/505008.505018

- Souza ACC, Nunes FLS, Delamaro ME. An automated functional testing approach for virtual reality applications. Softw Test Verif Reliab. 2018;28:e1690. https://doi.org/10.1002/stvr.1690

- Robert J. K. Jacob, Leonidas Deligiannidis, and Stephen Morrison. 1999. A software model and specification language for non-WIMP user interfaces. ACM Trans. Comput.-Hum. Interact. 6, 1 (March 1999), 1-46. DOI=http://dx.doi.org/10.1145/310641.310642

- Andrew Ray and Doug A. Bowman. 2007. Towards a system for reusable 3D interaction techniques. In Proceedings of the 2007 ACM symposium on Virtual reality software and technology (VRST '07), Stephen N. Spencer (Ed.). ACM, New York, NY, USA, 187-190. DOI=http://dx.doi.org/10.1145/1315184.1315219

- Memon, A. , Nagarajan, A. and Xie, Q. (2005), Automating regression testing for evolving GUI software. J. Softw. Maint. Evol.: Res. Pract., 17: 27-64. doi:10.1002/smr.305

- Memon, A. M. (2007), An event‐flow model of GUI‐based applications for testing. Softw. Test. Verif. Reliab., 17: 137-157. doi:10.1002/stvr.364

- GUI testing: Pitfalls and process (http://cvs.cs.umd.edu/~atif/papers/MemonIEEEComputer2002.pdf)

- GUI ripping: Reverse engineering of graphical user interfaces for testing (http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.122.5392&rep=rep1&type=pdf)

- Atif M. Memon, Mary Lou Soffa, and Martha E. Pollack. 2001. Coverage criteria for GUI testing. In Proceedings of the 8th European software engineering conference held jointly with 9th ACM SIGSOFT international symposium on Foundations of software engineering (ESEC/FSE-9). ACM, New York, NY, USA, 256-267. DOI: https://doi.org/10.1145/503209.503244

- R. K. Shehady and D. P. Siewiorek, "A method to automate user interface testing using variable finite state machines," Proceedings of IEEE 27th International Symposium on Fault Tolerant Computing, Seattle, WA, USA, 1997, pp. 80-88. doi:10.1109/FTCS.1997.614080 http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=614080&isnumber=13409

- C. A. Wingrave and D. A. Bowman, "Tiered Developer-Centric Representations for 3D Interfaces: Concept-Oriented Design in Chasm," 2008 IEEE Virtual Reality Conference, Reno, NV, 2008, pp. 193-200. doi: 10.1109/VR.2008.4480773 http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=4480773&isnumber=4480728

1.2. Data quality testing

Enterprises use data stores such as databases and data warehouses to store, manage, access, and query the data. The quality and correctness of the data are critical to every enterprise. Records get corrupted because of how the data is collected, transformed, and managed, and because of malicious activity. Incorrect records may violate constraints pertaining to attributes. Constraints can exist over single attributes (patient_age >= 0) or multiple attributes (pregnancy_status = true => patient_gender = female). Moreover, these constraints can exist over records involving time series and sequences. For example, the patient_weight growth rate over time must be positive for the records pertaining to every infant.
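As a minimal illustration, constraints like these can be written as predicates over a record. The sketch below is hypothetical: the field names mirror the examples above, the record values are made up, and the `check_record` and `weights_increase` helpers are invented for illustration (they are not part of any tool referenced in this handout).

```python
from typing import Callable, Dict, List

Record = Dict[str, object]

# Each entry maps a constraint name to a predicate that must hold for a valid record.
CONSTRAINTS: Dict[str, Callable[[Record], bool]] = {
    # Single-attribute constraint: patient_age >= 0
    "age_non_negative": lambda r: r["patient_age"] >= 0,
    # Multi-attribute constraint: pregnancy_status = true => patient_gender = female
    "pregnant_implies_female": lambda r: (not r["pregnancy_status"])
    or r["patient_gender"] == "female",
}


def check_record(record: Record) -> List[str]:
    """Return the names of all constraints that the record violates."""
    return [name for name, holds in CONSTRAINTS.items() if not holds(record)]


def weights_increase(weights: List[float]) -> bool:
    """Sequence constraint: each weight measurement exceeds the previous one."""
    return all(later > earlier for earlier, later in zip(weights, weights[1:]))


# Made-up records for illustration only.
valid = {"patient_age": 31, "pregnancy_status": True, "patient_gender": "female"}
faulty = {"patient_age": -4, "pregnancy_status": True, "patient_gender": "male"}

print(check_record(valid))                 # no constraint is violated
print(check_record(faulty))                # both constraints are violated
print(weights_increase([3.4, 4.1, 5.0]))   # infant weight grows over time
```

Reporting the names of the violated constraints, rather than only flagging the record, is what distinguishes this style of check from a detector that reports faulty records without an explanation.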

Inaccurate data may remain undetected and cause incorrect decisions or produce wrong research results. Thus, rigorous data quality testing approaches are required to ensure that the data is correct. Data quality tests validate the data in data stores to detect violations of syntactic and semantic constraints. Data quality tests rely on the accurate specification of constraints.

Current data quality test approaches check for violations of constraints defined by domain experts, who can miss important constraints. Tools that automatically generate constraints only check for trivial constraints, such as the not-null check. Existing AI-based techniques generally report the faulty records but do not determine the constraints that are violated by those records.

Problem: How do you develop systematic data quality testing techniques that automatically (1) discover the constraints associated with data, (2) generate test cases from the discovered constraints, and (3) determine the constraints that are violated by the reported faults?

Tasks:

- Apply different unsupervised machine learning algorithms for constraint discovery in the data, and evaluate the fault detection and constraint discovery effectiveness of those algorithms.

- Evaluate the data quality test tool using datasets from different domains.

- Apply different explainable machine learning algorithms to determine the constraints that are violated by the detected faults.

References:

- H. Homayouni, S. Ghosh, I. Ray, M. Kahn. An Interactive Data Quality Test Approach for Constraint Discovery and Fault Detection, accepted to IEEE Big Data, Los Angeles, December 2019.

- H. Homayouni, S. Ghosh, and I. Ray. ADQuaTe: An Automated Data Quality Test Approach for Constraint Discovery and Fault Detection, Proceedings of the IEEE 20th International Conference on Information Reuse and Integration for Data Science, Los Angeles, USA, July 30-August 1, 2019. Link

- H. Homayouni, GitHub Repo. Use the master branch for non-time-series data. The working branch should work for both time-series and non-time-series data.

- H. Homayouni, S. Ghosh, I. Ray, “Using Autoencoder to Generate Data Quality Tests”, Rocky Mountain Celebration of Women in Computing (RMCWIC), Denver, USA, November 2–3, 2018.

- G. O. Campos, A. Zimek, J. Sander, R. J. G. B. Campello, B. Micenková, E. Schubert, I. Assent, and M. E. Houle, “On the Evaluation of Unsupervised Outlier Detection: Measures, Datasets, and an Empirical Study,” Data Mining and Knowledge Discovery, vol. 30, no. 4, pp. 891–927, 2016.

- M. Mahajan, S. Kumar, and B. Pant, “A Novel Cluster based Algorithm for Outlier Detection,” in Computing, Communication and Signal Processing, B. Iyer, S. Nalbalwar, and N. P. Pathak, Eds. Springer Singapore, 2019, pp. 449–456.

- R. Domingues, M. Filippone, P. Michiardi, and J. Zouaoui, “A Comparative Evaluation of Outlier Detection Algorithms: Experiments and Analyses,” Pattern Recognition, vol. 74, pp. 406–421, 2018.

1.3. Data warehouse testing

Enterprises use data warehouses to accumulate data from multiple sources for analysis and research. A data warehouse is populated using the Extract, Transform, and Load (ETL) process that (1) extracts data from various source systems, (2) integrates, cleans, and transforms it into a common form, and (3) loads it into the target data warehouse. ETL processes can use complex one-to-one, many-to-one, and many-to-many transformations involving sources and targets that use different schemas, databases, and technologies. Since faulty implementations in any of the ETL steps can result in incorrect information in the target data warehouse, ETL processes must be thoroughly validated.

Problem: How do you perform effective and efficient software testing for ETL processes? You can validate ETL processes using automated balancing tests that check for discrepancies between the data in the source databases and that in the target warehouse.
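To make the idea of a balancing test concrete, here is a minimal sketch using Python's built-in `sqlite3` module. Everything in it is an assumption made for illustration: the table names (`source_visits`, `target_visits`), the `cost` column, the data, and the two properties checked (record count and column sum) for a one-to-one table mapping. It is not the balancing approach described in the references.

```python
import sqlite3

# In-memory database standing in for both the source and the target warehouse.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE source_visits (patient_id INTEGER, cost REAL);
    CREATE TABLE target_visits (patient_id INTEGER, cost REAL);
    INSERT INTO source_visits VALUES (1, 120.0), (2, 80.5), (3, 42.0);
    -- Simulated ETL fault: one source record was never loaded.
    INSERT INTO target_visits VALUES (1, 120.0), (2, 80.5);
    """
)


def scalar(query: str):
    """Run a query that returns a single value and return that value."""
    return conn.execute(query).fetchone()[0]


def balancing_failures(source: str, target: str, numeric_column: str) -> list:
    """Compare aggregate properties of a one-to-one source-to-target table mapping."""
    failures = []
    # Property 1: no records are lost or duplicated during loading.
    if scalar(f"SELECT COUNT(*) FROM {source}") != scalar(f"SELECT COUNT(*) FROM {target}"):
        failures.append("record count mismatch")
    # Property 2: the numeric column balances between source and target.
    src_sum = scalar(f"SELECT SUM({numeric_column}) FROM {source}")
    tgt_sum = scalar(f"SELECT SUM({numeric_column}) FROM {target}")
    if abs(src_sum - tgt_sum) > 1e-9:
        failures.append(f"sum({numeric_column}) mismatch")
    return failures


print(balancing_failures("source_visits", "target_visits", "cost"))
```

With the missing record, both assertions fail. Many-to-one and many-to-many mappings would need different properties, since record counts are not expected to match after aggregation.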

Tasks:

- Formalize property definitions by considering all possible source-to-target mappings between tables, attributes, and records and define the properties that need to be validated for each mapping type.

- Evaluate the balancing test approach that we developed on other application domains. Generate balancing tests for an application domain by (1) automatically generating the source-to-target mappings from ETL transformation rules provided in the specifications and (2) using these mappings to automatically generate balancing test assertions corresponding to each property. The assertions must compare the data in the target data warehouse with the corresponding data in the sources to verify the properties.

- Evaluate the fault detection effectiveness of the balancing approach to demonstrate that (1) the approach can detect previously undetected real-world faults in the ETL implementation and (2) the generated assertions are strong enough to detect faults injected into the data using a set of mutation operators.

References:

- H. Homayouni, S. Ghosh, I. Ray, 2018. “An Approach for Testing the Extract-Transform-Load Process in Data Warehouse Systems”. In 22nd International Database Engineering & Applications Symposium, Villa San Giovanni, Italy, pp. 236–245.

- H. Homayouni, 2018. “Testing Extract-Transform-Load Process in Data Warehouse Systems”. In Doctoral Symposium section of the 29th IEEE International Symposium on Software Reliability Engineering, Memphis, USA.

- Neveen ElGamal, Ali El Bastawissy, and Galal Galal-Edeen. 2012. Towards a Data Warehouse Testing Framework. In 9th International Conference on ICT and Knowledge Engineering. 65–71.

- Matteo Golfarelli and Stefano Rizzi. 2009. A Comprehensive Approach to Data Warehouse Testing. In 12th ACM International Workshop on Data Warehousing and OLAP. New York, USA, 17–24.

- Matteo Golfarelli and Stefano Rizzi. 2011. Data Warehouse Testing: A Prototype-based Methodology. Information and Software Technology 53, 11 (2011), 1183–1198.

1.4. Regression test selection

Possible projects include evaluating and comparing state-of-the-art regression test selection tools as well as developing new ideas for regression test selection using NLP and machine learning techniques, or for new domains (e.g., web testing).

Here is a survey paper on regression test minimization, selection, and prioritization.

- Shin Yoo, Mark Harman: Regression testing minimization, selection and prioritization: a survey. Softw. Test., Verif. Reliab. 22(2): 67-120 (2012) Link.

1.5. Automatic program repair

- Functionality related faults

- Performance bugs

Here is a source for information on automatic program repair.

- Martin Monperrus. “Automatic Software Repair: a Bibliography”, Technical Report #hal-01206501, University of Lille. 2015. Link.

1.6. Fault localization

- Functionality related faults

- Performance bugs

Here is a survey on fault localization.

- W. Eric Wong, Ruizhi Gao, Yihao Li, Rui Abreu, Franz Wotawa: A Survey on Software Fault Localization. IEEE Trans. Software Eng. 42(8): 707-740 (2016) Link

1.7. Mutation testing (including higher order mutation)

You can focus on any of the following areas of mutation testing. You will have to search for relevant papers.

- Applying mutation testing to a new area: Web programming

- Efficient generation of mutants

- Subsumption relationships between mutants/mutant classes

- Automatically detecting equivalent mutants

- Test case generation to kill mutants
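For readers new to the terminology, the toy sketch below shows what it means for a test to kill a mutant and how a mutation score is computed. The function `is_adult`, its two hand-written mutants, and the test data are all hypothetical, and the score assumes that neither mutant is equivalent to the original program.

```python
from typing import Callable, List, Tuple

TestCase = Tuple[int, bool]  # (input age, expected result)


def is_adult(age: int) -> bool:
    """Original program under test."""
    return age >= 18


def mutant_gt(age: int) -> bool:
    """First-order mutant: relational operator >= replaced with >."""
    return age > 18


def mutant_le(age: int) -> bool:
    """First-order mutant: relational operator >= replaced with <=."""
    return age <= 18


def kills(tests: List[TestCase], mutant: Callable[[int], bool]) -> bool:
    """A test suite kills a mutant if at least one test fails when run on it."""
    return any(mutant(age) != expected for age, expected in tests)


def mutation_score(tests: List[TestCase], mutants: List[Callable[[int], bool]]) -> float:
    """Fraction of (non-equivalent) mutants killed by the test suite."""
    killed = sum(kills(tests, m) for m in mutants)
    return killed / len(mutants)


mutants = [mutant_gt, mutant_le]
weak_suite = [(30, True), (5, False)]       # no boundary value
strong_suite = weak_suite + [(18, True)]    # adds the boundary value 18

print(mutation_score(weak_suite, mutants))
print(mutation_score(strong_suite, mutants))
```

The weak suite misses `mutant_gt` because it never exercises the boundary value, which is the kind of gap that test case generation to kill mutants aims to close.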

Here is a survey paper on mutation testing techniques.

- Yue Jia, Mark Harman. An Analysis and Survey of the Development of Mutation Testing. IEEE Trans. Software Eng. 37(5): 649-678 (2011) Link

- Mike Papadakis, Marinos Kintis, Jie Zhang, Yue Jia, Yves Le Traon and Mark Harman. Mutation Testing Advances: An Analysis and Survey. Advances in Computers, Volume 112 1st Edition, 2019. Link

1.8. Test input generation

- Dynamic symbolic execution

- Search-based testing

Here is a paper on the use of symbolic execution for software testing. In some of the lectures we studied other techniques for test input generation.

- Cristian Cadar, Koushik Sen: Symbolic execution for software testing: three decades later. Commun. ACM 56(2): 82-90 (2013). Link.

1.9. GUI testing

- Android apps (or mobile apps in general)

- Web application testing

- UI testing

Here are some papers:

- Domenico Amalfitano, Nicola Amatucci, Atif M. Memon, Porfirio Tramontana, Anna Rita Fasolino: A general framework for comparing automatic testing techniques of Android mobile apps. Journal of Systems and Software 125: 322-343 (2017) Link

- Bao N. Nguyen, Bryan Robbins, Ishan Banerjee, Atif M. Memon: GUITAR: an innovative tool for automated testing of GUI-driven software. Autom. Softw. Eng. 21(1): 65-105 (2014) Link

1.10. Applying techniques you learned in class to an open source project (aTunes)

aTunes is an open source project. Select version 1.6 and improve the level of testing. You will write tests using JUnit after creating new test cases using the techniques you learned in this class. You will measure code coverage and mutation score, and attempt to raise both of them. You will run regression test cases on the next version and apply a regression test selection tool to reduce the number of test cases.

2. Proposal document

The proposal must be 2-3 pages long, 11 pt font size, single column, and single spaced.

The proposal must have a title, author name and affiliations, and date at the top.

If you are proposing a new approach, then the body of the proposal should cover the following topics in numbered sections (though the list below need not match the section titles).

- The background of the problem

- A statement of the problem

- Proposed approach to the problem

- Proposed evaluation plan with specific metrics

- Tasks and tools to be used

- Schedule to be followed

- References (2-3 to begin with)

If you are comparing approaches or applying approaches in a new setting (e.g., your work/research group), the structure will be a little different.

- The background of the problem

- A statement of the problem

- Approach(es) to be used to solve the problem

- Proposed evaluation or comparison plan with specific metrics

- Tasks and tools to be used

- Schedule to be followed

- References (2-3 to begin with)

3. Submission

Submit a document called EID_proposal.pdf. For example, I would submit my proposal as ghosh_proposal.pdf. Submit the file using Assignment Submission in Canvas. Two separate submissions will be set up for the draft and final versions. Draft submissions must be made by October 25, 2019. You will get feedback from the instructor. The final version must be submitted by November 1, 2019.