Human-AI Collaboration and Group Dynamics

Description:

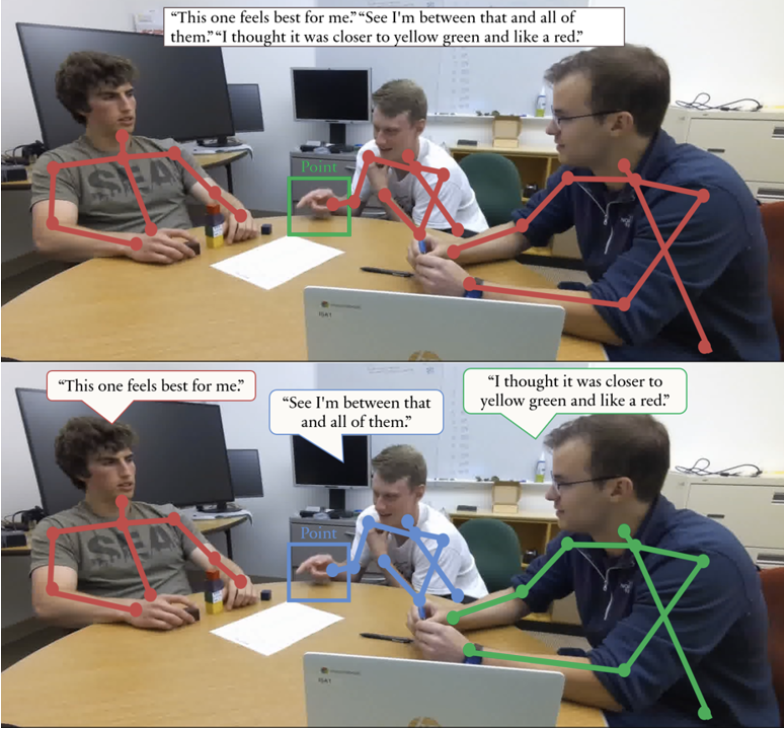

A key challenge in automatically interpreting the dynamics of co-situated collaborative groups is parsing input and attributing it to individual group members, so that each member's perspective and contributions can be processed. In this work, we describe the components such a system needs to handle multimodal, multi-party input. We apply these methods to the Weights Task Dataset (WTD), an audiovisual dataset of a co-situated collaborative task, to track individuals' beliefs about the task. We find that combining audiovisual speaker detection (ASD) with utterance transcripts enables individual belief tracking, with scores similar to those seen in dense-paraphrased common ground tracking. Further, we demonstrate that a combination of ASD and point target detection can be applied to transcripts for automated dense paraphrasing. We also identify which individual components need improvement, including ASD and task-belief identification.
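As a rough illustration of the attribution step described above, the sketch below assigns each transcript utterance to the speaker whose detected speaking time overlaps it most. It is a minimal sketch under stated assumptions, not the pipeline used in this work: the `Utterance` and `SpeakerSegment` types, the `attribute_speakers` function, the timestamps, and the participant labels are all hypothetical, and the sketch assumes ASD output has already been reduced to per-speaker time segments.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Utterance:
    """A transcribed utterance with start/end times in seconds (hypothetical format)."""
    start: float
    end: float
    text: str


@dataclass
class SpeakerSegment:
    """A time span in which ASD marked one participant as speaking (hypothetical format)."""
    start: float
    end: float
    speaker: str


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time intervals (0.0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def attribute_speakers(
    utterances: list[Utterance], segments: list[SpeakerSegment]
) -> list[tuple[Optional[str], str]]:
    """Pair each utterance with the speaker whose segments overlap it the most.

    Utterances that overlap no segment are attributed to None.
    """
    attributed = []
    for utt in utterances:
        totals: dict[str, float] = {}
        for seg in segments:
            shared = overlap(utt.start, utt.end, seg.start, seg.end)
            if shared > 0:
                totals[seg.speaker] = totals.get(seg.speaker, 0.0) + shared
        speaker = max(totals, key=totals.get) if totals else None
        attributed.append((speaker, utt.text))
    return attributed


if __name__ == "__main__":
    # Invented example data, for illustration only.
    utterances = [
        Utterance(0.0, 2.0, "I think the red block is ten grams"),
        Utterance(2.5, 4.0, "then the blue one must be heavier"),
    ]
    segments = [
        SpeakerSegment(0.0, 1.8, "P1"),
        SpeakerSegment(1.8, 4.2, "P2"),
    ]
    for speaker, text in attribute_speakers(utterances, segments):
        print(speaker, "|", text)
```

Once utterances carry a speaker label, any belief-related content extracted from them can be grouped per participant rather than pooled for the whole group.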

Publications:

Venkatesha, V., Nath, A., Khebour, I., Chelle, A., Bradford, M., Tu, J., VanderHoeven, H., Bhalla, B., Youngren, A., Pustejovsky, J., Blanchard, N., & Krishnaswamy, N. (2025). Propositional Extraction from Collaborative Naturalistic Dialogues. [DOI]

Nath, A., Venkatesha, V., Bradford, M., Chelle, A., Youngren, A. C., Mabrey, C., Blanchard, N., & Krishnaswamy, N. (2024). "Any Other Thoughts, Hedgehog?" Linking Deliberation Chains in Collaborative Dialogues. arXiv preprint arXiv:2410.19301. [DOI]

VanderHoeven, H., Bradford, M., Jung, C., Khebour, I., Lai, K., Pustejovsky, J., & Blanchard, N. (2024). Multimodal Design for Interactive Collaborative Problem-Solving Support. In International Conference on Human-Computer Interaction (pp. 60–80). Springer. [DOI]

VanderHoeven, H., Blanchard, N., & Krishnaswamy, N. (2024). Point Target Detection for Multimodal Communication. In International Conference on Human-Computer Interaction (pp. 356–373). Springer. [DOI]

Nath, A., Jamil, H., Ahmed, S. R., Baker, G., Ghosh, R., Martin, J. H., Blanchard, N., & Krishnaswamy, N. (2024). Multimodal Cross-Document Event Coreference Resolution Using Linear Semantic Transfer and Mixed-Modality Ensembles. In Proceedings of the 2024 Joint International Conference on Computational Linguistics. [DOI]

Khebour, I., Lai, K., Bradford, M., Zhu, Y., Brutti, R., Tam, C., Tu, J., Ibarra, B., & Blanchard, N. (2024). Common Ground Tracking in Multimodal Dialogue. arXiv preprint arXiv:2403.17284. [DOI]

Venkatesha, V., Nath, A., Khebour, I., Chelle, A., Bradford, M., Tu, J., & Blanchard, N. (2024). Propositional Extraction from Natural Speech in Small Group Collaborative Tasks. In Proceedings of the 17th International Conference on Educational Data Mining. [DOI]

Bradford, M., Khebour, I., VanderHoeven, H., Venkatesha, V., Blanchard, N., & Krishnaswamy, N. (2025). Tracking Individual Beliefs in Co-Situated Groups Using Multimodal Input. In International Conference on Human-Computer Interaction.