Recent and Current Projects

2021 - Present

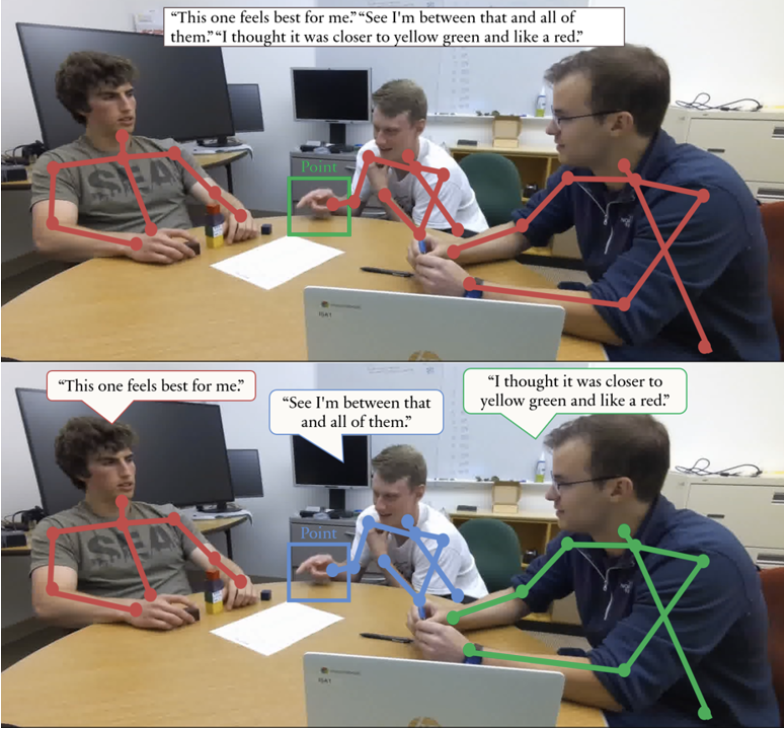

We study how humans and AI systems can work together effectively in small-group settings, focusing on shared understanding, belief tracking, and collaborative problem-solving.

2022 - Present

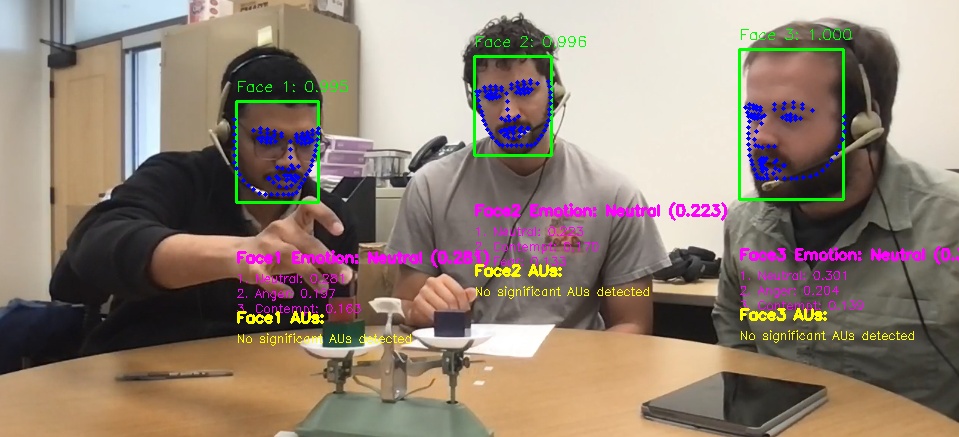

We explore how to detect, interpret, and model human affective and cognitive states using multimodal data, including facial expressions, physiological signals, and behavioral cues.

Summer 2022 - Fall 2024

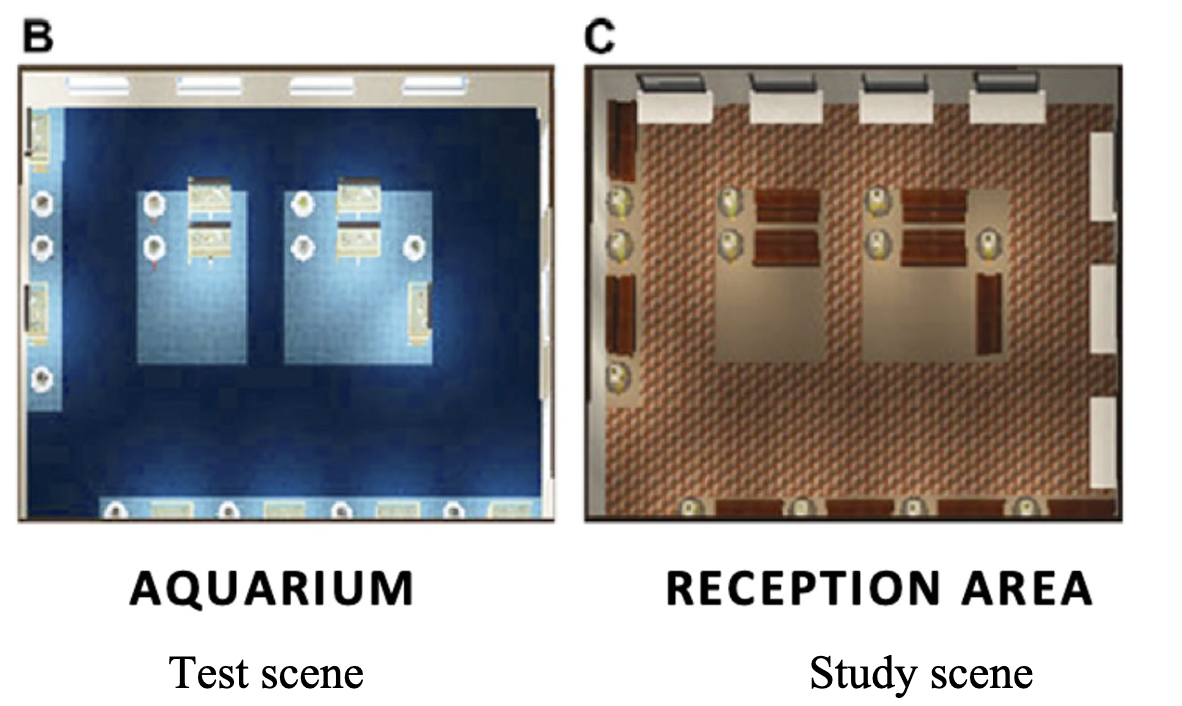

We investigate the use of computer vision in extended reality (XR) contexts to enhance interactivity, perception, and user modeling in virtual and augmented environments.

Summer 2022 - Fall 2024

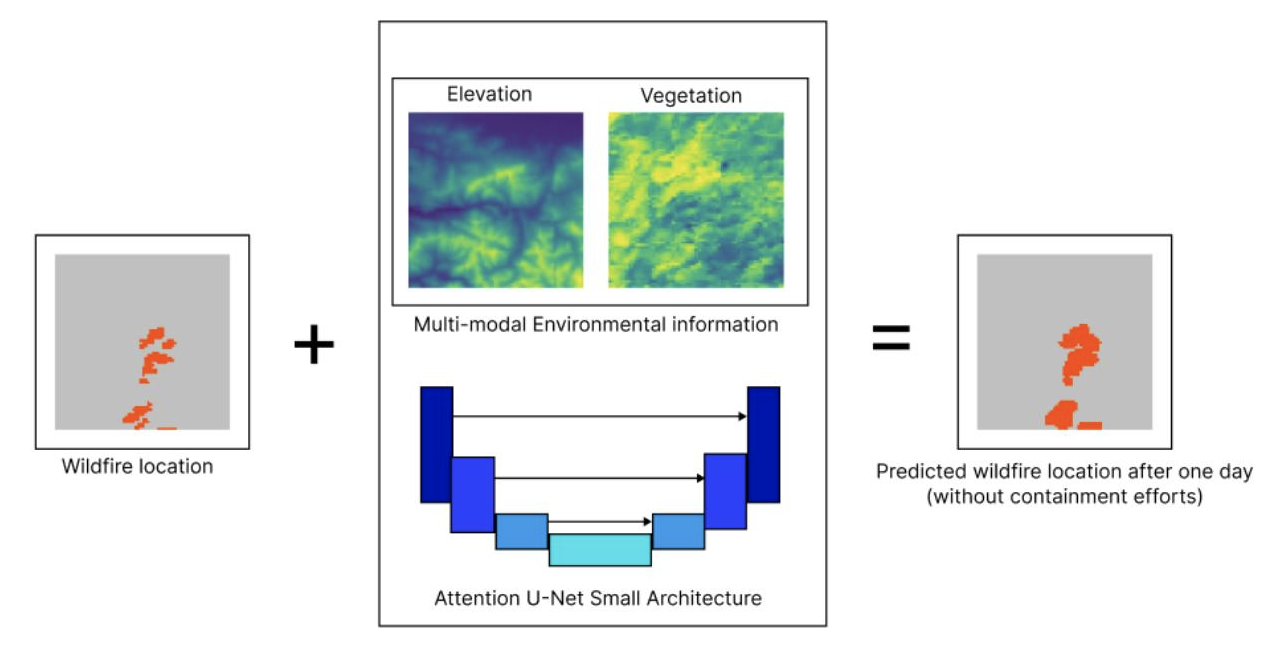

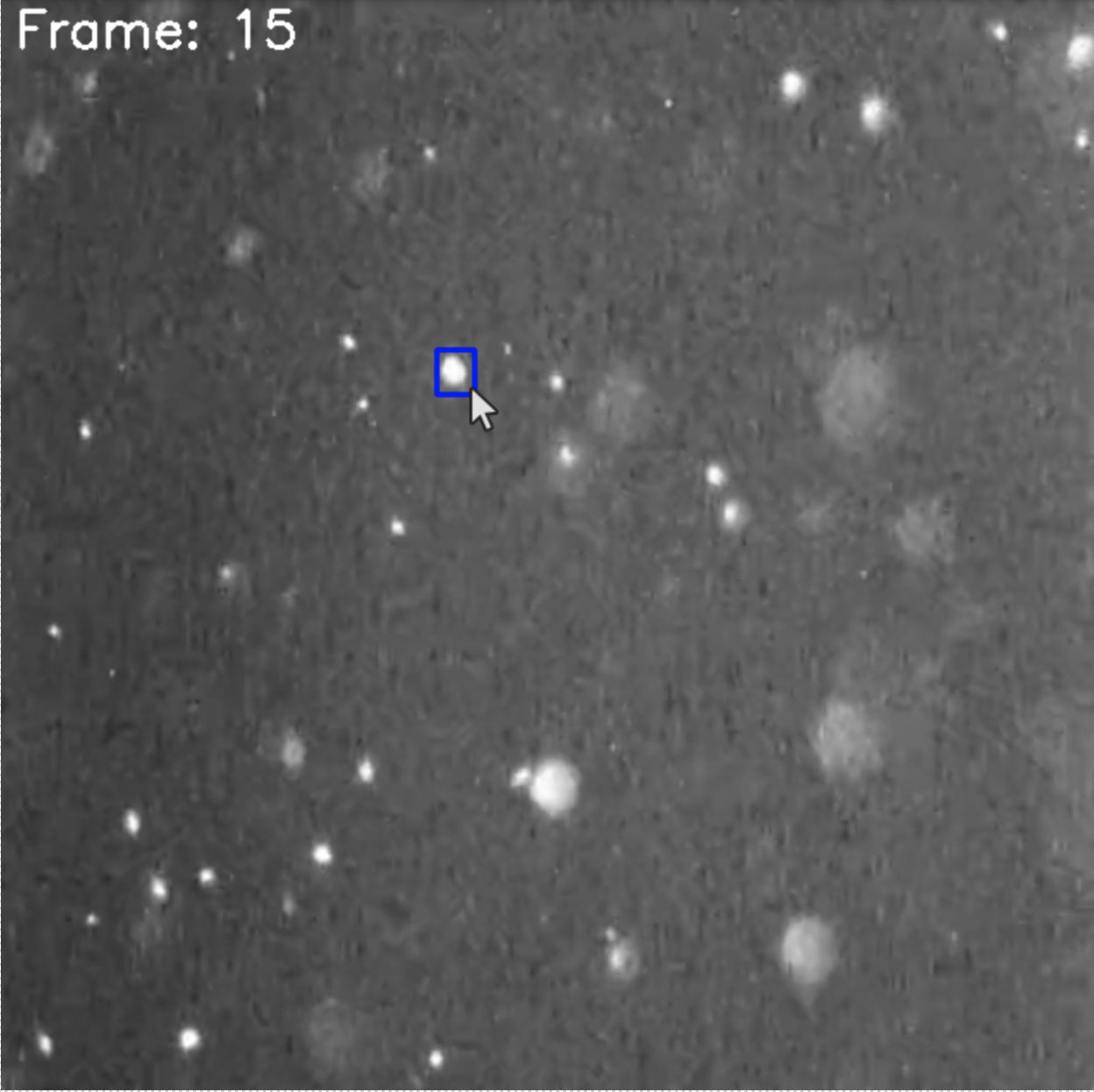

We apply computer vision techniques to remote sensing and environmental data to address challenges in wildfire prediction.

Summer 2022 - Fall 2024

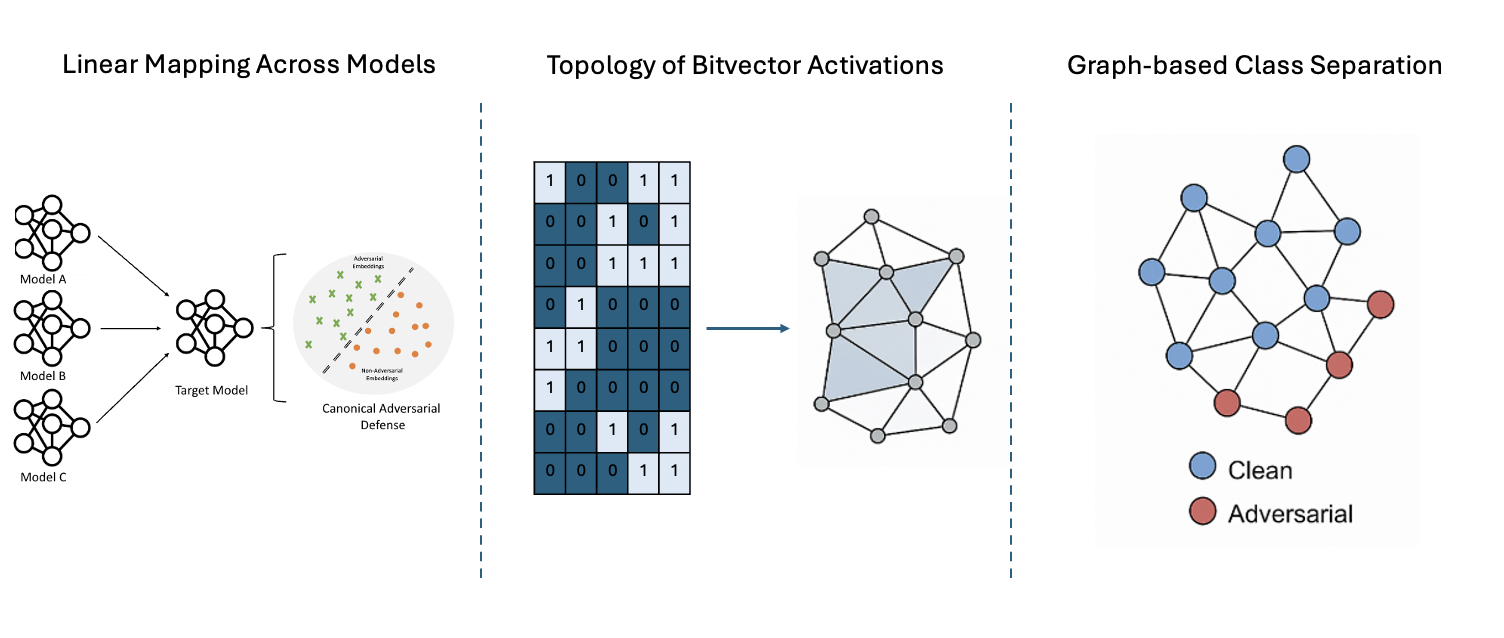

We aim to understand and improve the internal representations and robustness of deep learning models.

Summer 2022 - Fall 2024

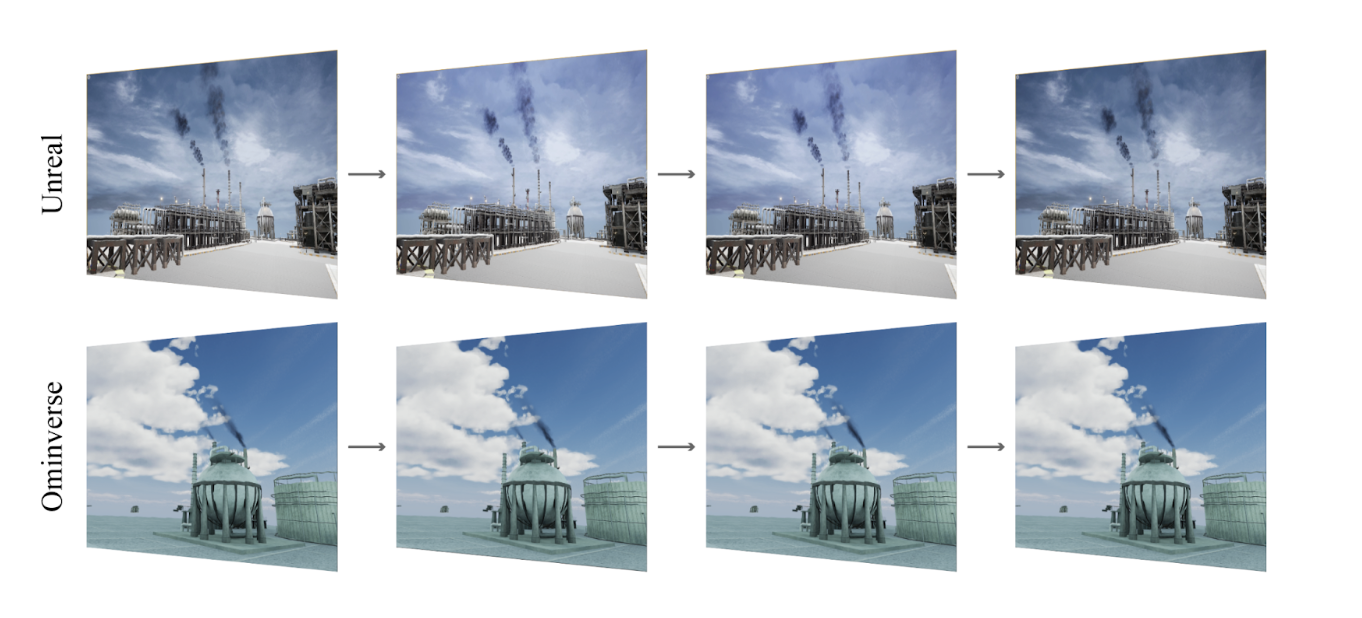

We explore the use of computer vision technologies in industrial settings, focusing on real-time detection, monitoring, and analysis to improve efficiency, safety, and decision-making.

Summer 2022 - Fall 2024

We apply computer vision techniques to enhance educational experiences.

Summer 2022 - Fall 2024

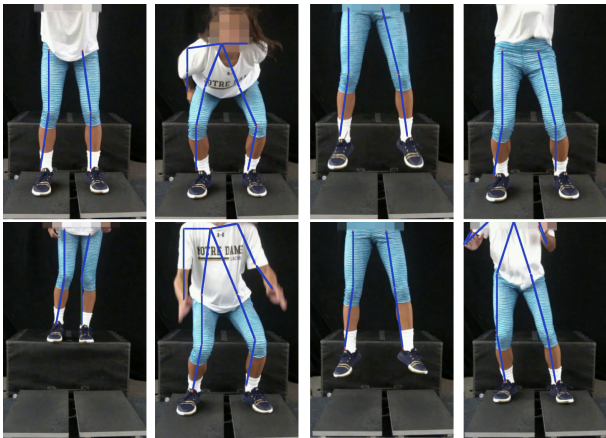

We aim to advance computer vision applications in sports science, making athlete performance evaluation more accessible and accurate.