Computer Vision in Immersive and XR Environments

Description:

Our lab explores cutting-edge computer vision (CV) techniques in immersive and extended reality (XR) environments to enhance accessibility, engagement, and learning outcomes. From enabling sound-based color perception for users with visual impairments in VR to evaluating the effectiveness of immersive task-based language learning, we design and test multimodal systems that push the boundaries of perceptual and educational interaction. By integrating CV with real-time user feedback and task performance, we aim to make XR environments more adaptive, inclusive, and cognitively enriching. We are also investigating how CV can detect users’ familiarity with environments or tasks, enabling personalized support and dynamic content adaptation in XR settings.

Publications:

Seefried, E., Bahny, J., Jung, C., Venkatesha, V., Bradford, M., Blanchard, N., & Krishnaswamy, N. (2024). Perceiving and Learning Color as Sound in Virtual Reality. In 2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct. [DOI]

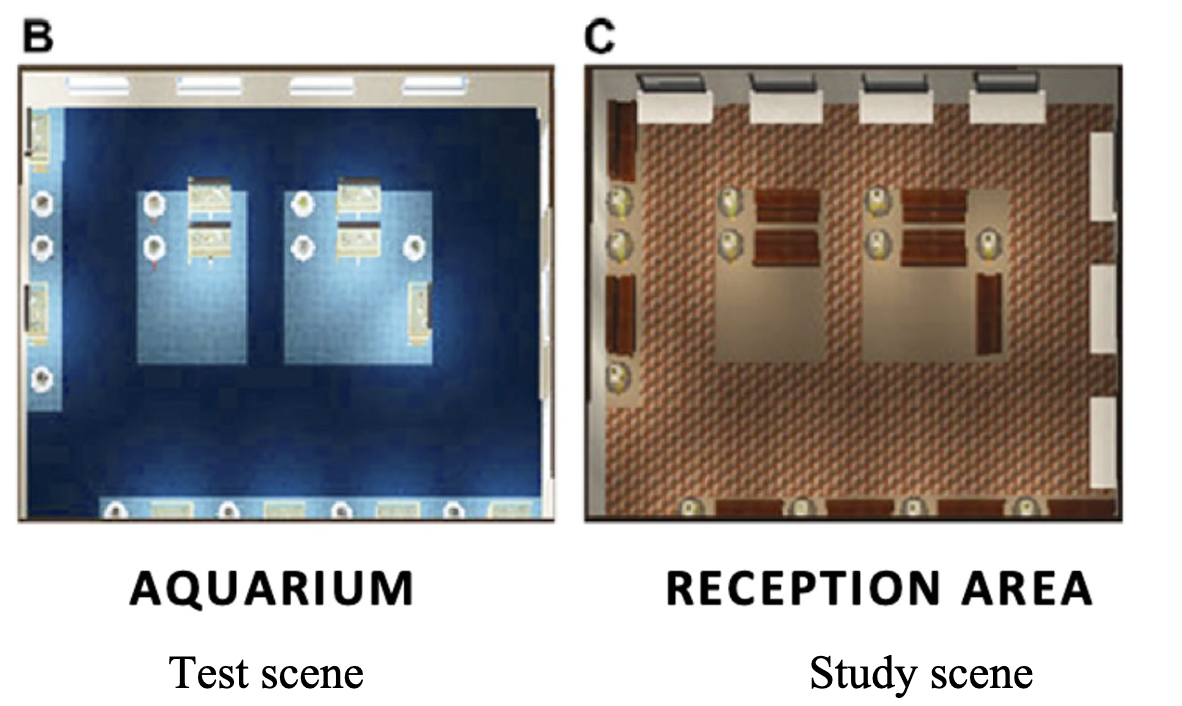

Chartier, T., Castillon, I., Venkatesha, V., Cleary, M., & Blanchard, N. T. (2024). Using Eye Gaze to Differentiate Internal Feelings of Familiarity in Virtual Reality Environments: Challenges and Opportunities. In 27th Annual CyberPsychology, CyberTherapy & Social Networking Conference. [DOI]

Bradford, M., Seefried, E., Krishnaswamy, N., & Blanchard, N. (2024). Thematic Analysis of Foreign Language Learning in a Virtual Environment. In 27th Annual CyberPsychology, CyberTherapy & Social Networking Conference. [DOI]